A Smarter AI Assistant with Retrieval-Augmented Generation: A Case Study

"I know people usually better than they know themselves." - Donna

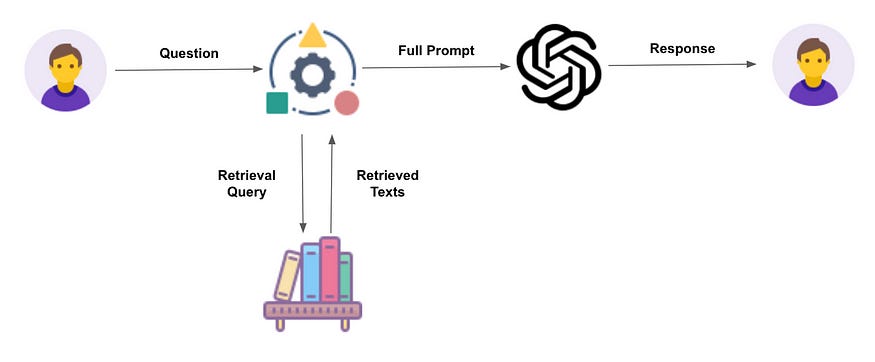

Retrieval-Augmented Generation, or RAG, is one of the hottest topics in AI today. The concept is widely discussed, but implementing it effectively can be more challenging than it seems. For those unfamiliar, RAG is a method that combines the retrieval of relevant data with the generation of responses, allowing AI systems to deliver more accurate and contextually relevant answers.

YourOwn, inspired by the series Suits, is building Donna, a powerful WhatsApp-based AI assistant tailored for SMBs. Donna comes equipped with a suite of tools for reading documents, setting reminders, and searching the internet. However, despite her capabilities, Donna had a significant limitation: she couldn’t access or use company-specific data.

The Vision: Enhancing Donna with RAG

The goal was clear: to integrate a RAG system into Donna, enabling her to access and retrieve company-specific data, thereby making her more useful and relevant to the businesses she serves.

What is RAG?

At its core, RAG consists of two primary components:

Data Retrieval: This involves fetching and storing data from various sources.

Data Searching: This part involves searching through the stored data to find relevant information when needed.

Together, these two processes let an AI system pull specific, relevant data on demand and pass it to the language model as context for its answer, which significantly improves response accuracy.

The Blueprint: Building a Successful RAG System

Step 1: Understand the Requirements

The first step in building a successful RAG system is to thoroughly understand the project’s requirements. For Donna, this meant considering factors like:

Number of Companies: How many businesses will Donna serve?

File Types: What kind of documents and file extensions will she need to handle?

Latency Constraints: How fast do data retrieval and search need to be?

These are just a few of the critical questions that need to be addressed upfront.

Step 2: Identify Integration Points

The next step was identifying where and how to integrate the RAG system with existing infrastructure. In the case of Donna:

Data Source: We agreed to use Google Drive as the primary data source.

Authentication: Implementing OAuth mechanisms was essential to securely access company data.

Folder Selection: Users needed a way to select the specific folders Donna is allowed to access.

File Handling: The system had to manage file changes, additions, and deletions effectively.

Chatbot Integration: Donna’s chatbot was already built using LangChain, so seamless integration with this framework was crucial.

Communication: Since Donna interacts with users via WhatsApp, integrating with the WhatsApp server was also vital.
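To make the file-handling point concrete, here is a minimal sketch of detecting additions, changes, and deletions between two sync runs. It assumes each run produces a snapshot mapping a file ID to some version marker (for example a modified timestamp); the function name `diff_snapshots` and the snapshot shape are hypothetical and not taken from Donna's actual code.

```python
def diff_snapshots(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two {file_id: version_marker} snapshots (hypothetical shape).

    Returns the file IDs that were added, changed, or deleted since the
    previous snapshot, so the index can be updated incrementally.
    """
    added = sorted(fid for fid in current if fid not in previous)
    deleted = sorted(fid for fid in previous if fid not in current)
    changed = sorted(
        fid for fid in current
        if fid in previous and current[fid] != previous[fid]
    )
    return {"added": added, "changed": changed, "deleted": deleted}


# Illustrative snapshots; the IDs and timestamps are made up.
before = {"doc-1": "2024-01-01", "doc-2": "2024-01-05"}
after = {"doc-2": "2024-02-01", "doc-3": "2024-02-02"}
changes = diff_snapshots(before, after)
```

With a diff like this, added and changed files would be re-chunked and re-embedded, while deleted files would have their vectors removed from the database.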

Step 3: Build the Population Pipeline

Once the integration points were identified, the next step was building the pipeline that populates the database. This involved:

Fetching the Data: Retrieving data from the identified sources, such as Google Drive, using secure access protocols like OAuth. This step is crucial for ensuring that the system has the necessary data to work with.

Chunking and Embeddings: Splitting documents into manageable chunks and generating embeddings (vector representations) to make the data searchable.

Vector Database Setup: Setting up a vector database to store these embeddings. A vector database is a specialized database designed to handle the complexities of storing and searching through vectorized data, which is crucial for efficient and accurate retrieval in a RAG system. I decided to use Pinecone because it is a fully managed, cloud-based vector database with a reasonably large free tier. A tutorial I highly recommend where you can use Pinecone together with LangChain can be found here.
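As an illustration of the chunking step, the sketch below splits text into fixed-size character chunks with some overlap, so that a sentence cut at a chunk boundary still appears whole in a neighboring chunk. The chunk size, the overlap, and the function name `chunk_text` are assumptions for illustration; the splitter and settings actually used for Donna are not described here.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character chunks (illustrative defaults)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")

    step = chunk_size - overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


example_chunks = chunk_text("abcdefghij", chunk_size=4, overlap=2)
```

Each chunk would then be passed to an embedding model, and the resulting vector stored in the vector database together with the chunk text and its source file.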

Step 4: Implement the Semantic Retriever

The semantic retriever is the core component that powers the search functionality within the RAG system. For Donna, I implemented a hybrid retriever that combines two powerful methods: similarity search and a BM25 retriever. This combination enhances the accuracy and relevance of the search results by addressing the limitations of each method when used independently.

Similarity Search

Similarity search is a technique that uses vector representations (embeddings) of documents to find the most similar pieces of information based on a query. In this context, each document is converted into a vector—a numerical representation that captures the semantic meaning of the text. When a query is made, the system searches for the vectors that are closest to the query vector in the high-dimensional space. This approach is highly effective for capturing the overall context and meaning of the query and the documents.

However, similarity search can sometimes miss specific keywords or exact phrases because it focuses on the broader semantic context rather than individual words. This is where the second component, BM25, comes into play.
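The idea of "closest vectors" can be sketched with a brute-force search over toy vectors. This example assumes cosine similarity as the distance measure and uses made-up two-dimensional vectors; real embeddings have hundreds or thousands of dimensions, and a managed vector database performs this search on its side rather than in application code.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 3):
    """Return the k (doc_id, score) pairs most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy vectors standing in for real embeddings (hypothetical data).
toy_index = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
nearest = top_k_similar([1.0, 0.0], toy_index, k=2)
```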

BM25 Retriever

BM25 is a type of information retrieval model that is particularly good at keyword-based searches. It ranks documents based on the presence of query terms, considering factors like term frequency and document length. BM25 excels at finding documents that contain exact matches of the query terms, making it complementary to similarity search.
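To show how term frequency and document length enter the ranking, here is a small, self-contained sketch of BM25 scoring. It is not the retriever used in Donna: the whitespace tokenizer, the parameter values `k1 = 1.5` and `b = 0.75`, and the smoothed IDF variant are common choices that are assumed here for illustration.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score each document against the query with a basic BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]  # naive whitespace tokenizer
    n_docs = len(tokenized)
    if n_docs == 0:
        return []
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            # Rare terms get a higher weight (smoothed IDF, always positive).
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Longer-than-average documents are penalized through b.
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / (term_freq[term] + length_norm)
        scores.append(score)
    return scores


# Made-up documents for illustration.
sample_docs = [
    "invoice number 12345 for acme",
    "general notes about the quarterly meeting",
    "acme invoice invoice reminder",
]
sample_scores = bm25_scores("invoice 12345", sample_docs)
```

In this toy example the first document ranks highest because it contains the rare exact term "12345", which is the kind of match a purely semantic search might overlook.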

By combining BM25 with similarity search, we create a hybrid retriever that leverages the strengths of both methods. The hybrid retriever first performs a similarity search to identify documents that are semantically close to the query. Then, it uses the BM25 retriever to ensure that documents containing specific keywords or phrases are also considered. The results from both searches are merged, and duplicates are removed to provide a set of unique, highly relevant documents.
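The merge-and-deduplicate step described above can be sketched as follows. The function name `merge_results` and the use of plain document IDs are assumptions for illustration; the sketch keeps semantic hits first and appends keyword hits that were not already found, which is one simple way to combine the two result lists.

```python
from typing import Optional


def merge_results(
    semantic_hits: list[str],
    keyword_hits: list[str],
    limit: Optional[int] = None,
) -> list[str]:
    """Merge two ranked lists of document IDs, dropping duplicates.

    Order is preserved: semantic hits come first, followed by keyword
    hits that the semantic search did not already return.
    """
    seen = set()
    merged = []
    for doc_id in semantic_hits + keyword_hits:
        if doc_id not in seen:
            seen.add(doc_id)
            merged.append(doc_id)
    return merged if limit is None else merged[:limit]


# Hypothetical result lists from the two retrievers.
combined = merge_results(["a", "b", "c"], ["c", "d", "a"])
```

A weighted scheme such as reciprocal rank fusion is a common alternative when the two rankings should influence the final order more evenly.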

Step 5: Test, Iterate, and Refine

Building a RAG system is as much an art as it is a science. After the initial implementation, we created a custom evaluation set based on real-world questions derived from documents provided by our building partners. Through continuous iteration and refinement, we significantly improved the system’s performance.
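One simple way to score a retriever against such an evaluation set is the hit rate at k: the share of questions for which the expected document appears among the top k results. The metric, the `(question, expected_doc_id)` format, and the function name `hit_rate_at_k` are assumptions for illustration; the exact metrics used for Donna are not specified here.

```python
from typing import Callable


def hit_rate_at_k(
    eval_set: list[tuple[str, str]],
    retrieve: Callable[[str], list[str]],
    k: int = 5,
) -> float:
    """Fraction of questions whose expected document is in the top k results."""
    if not eval_set:
        return 0.0
    hits = 0
    for question, expected_doc_id in eval_set:
        if expected_doc_id in retrieve(question)[:k]:
            hits += 1
    return hits / len(eval_set)


# A fake retriever backed by a lookup table, standing in for the real one.
fake_results = {
    "q1": ["a", "x"],
    "q2": ["x", "b"],
    "q3": ["x", "y", "c"],
    "q4": ["x"],
}
questions = [("q1", "a"), ("q2", "b"), ("q3", "c"), ("q4", "d")]
score = hit_rate_at_k(questions, lambda q: fake_results.get(q, []), k=2)
```

Tracking a number like this across iterations makes it easier to tell whether a change to chunking or retrieval actually helped.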

The Result: A Smarter, More Capable Donna

After numerous iterations and refinements, Donna became significantly smarter. Armed with the ability to access and retrieve company-specific data, she now offers more tailored, contextually relevant assistance to SMBs. The addition of RAG has transformed Donna into a more powerful tool, making her an invaluable asset to the businesses she serves.

Conclusion: The Power of RAG

Implementing RAG was a challenging but rewarding experience. It demonstrated the immense potential of combining retrieval and generation in AI, particularly for applications that require access to specific, contextually relevant data. As Donna continues to evolve, the lessons learned from this project will serve as a foundation for future advancements in AI-driven personal assistants.

For a basic implementation of populating a Pinecone database with Wikipedia articles and searching through it, please visit my repo.

For those looking to implement similar systems, remember that the key to success lies in understanding your requirements, identifying integration points, building a robust data pipeline, and being prepared to iterate continuously. The journey can be complex, but the results are well worth the effort.

Bart, Maarten, and Elkan, good luck!

Michiel